Microsoft has given administrators plenty of work to do with July’s security update that contains patches for a brutal 139 unique CVEs, including two that attackers are actively exploiting and one that’s publicly known but remains unexploited for the moment.

The July update contains fixes for more vulnerabilities than the previous two monthly releases combined and addresses issues that, if left unmitigated, could enable remote code execution, privilege escalation, data theft, security feature bypass, and other malicious activities. The update includes patches for four non-Microsoft CVEs, one of which is a publicly known Intel microprocessor vulnerability.

Lack of Details Heightens Urgency to Fix Zero-Days

One of the zero-day vulnerabilities (CVE-2024-38080) affects Microsoft’s Windows Hyper-V virtualization technology and allows an authenticated attacker to execute code with system-level privileges on affected systems. Though Microsoft has assessed the vulnerability as easy to exploit and requiring no special privileges or user interaction, the company has given it only a moderate, or important, severity rating of 6.8 on the 10-point CVSS scale.

As is typical, Microsoft provided scant information on the flaw in its release notes. But the fact that attackers are already actively exploiting the flaw is reason enough to patch now, said Kev Breen, senior director of threat research at Immersive Labs, in an emailed comment. “Threat hunters would benefit from additional details, so that they can determine if they have already been compromised by this vulnerability,” he said.

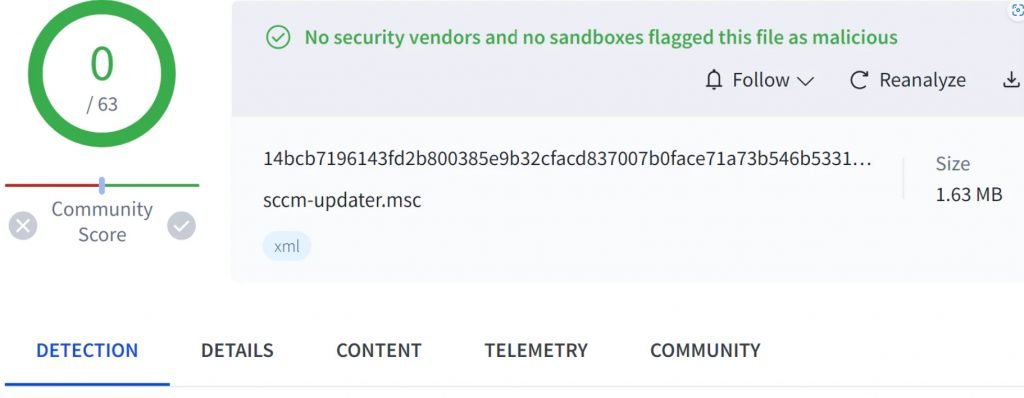

The other zero-day bug, tracked as CVE-2024-38112, affects the Windows MSHTML Platform (aka Trident browser engine) and has a similarly moderate CVSS severity rating of 7.0. Microsoft described the bug as a spoofing vulnerability that an attacker could exploit only by convincing a user to click on a malicious link.

That description left some wondering about the actual nature of the threat it represented. “This bug is listed as ‘spoofing’ for the impact, but it’s not clear exactly what is being spoofed,” Dustin Childs, head of threat awareness at Trend Micro’s Zero Day Initiative (ZDI), wrote in a blog post. “Microsoft has used this wording in the past for NTLM relay attacks, but that seems unlikely here.”

Rob Reeves, principal cybersecurity engineer at Immersive Labs, viewed the vulnerability as likely enabling remote code execution but potentially complex to exploit, based on Microsoft’s sparse description. “Exploitation also likely requires the use of an ‘attack chain’ of exploits or programmatic changes on the target host,” he said in prepared comments. “But without further information from Microsoft or the original reporter … it is difficult to give specific guidance.”

Other High-Priority Bugs

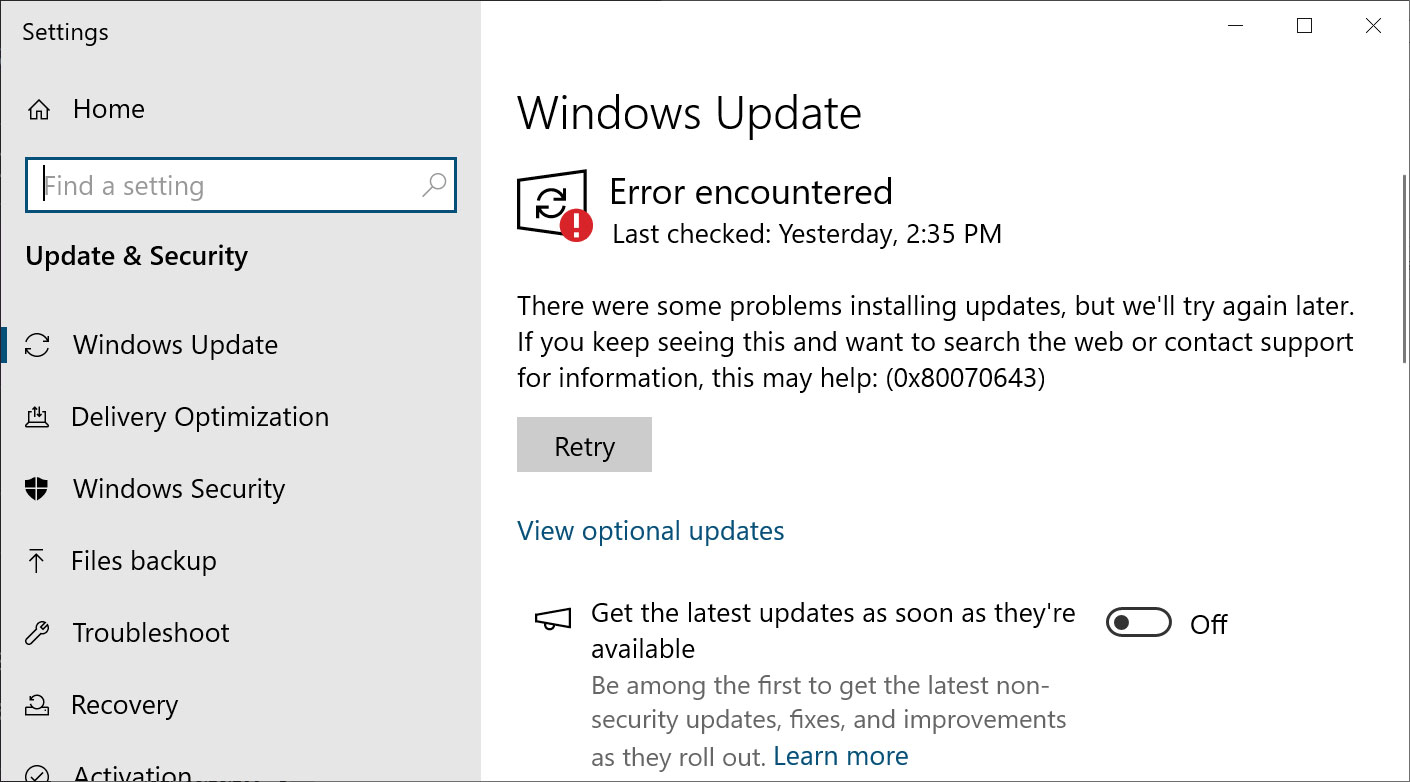

The two bugs that were publicly known prior to Microsoft’s July update, and hence are also technically zero-day flaws, are CVE-2024-35264, a remote code execution vulnerability in .NET and Visual Studio, and CVE-2024-37985, which is actually a third-party (Intel) CVE that Microsoft has integrated into its release.

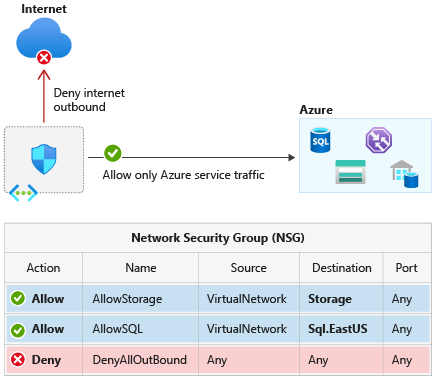

In all, Microsoft rated just four of the flaws in its enormous update as being of critical severity. Three of them, each with a near-maximum severity rating of 9.8 out of 10, affect the Windows Remote Desktop Licensing Service, the component that manages client access licenses (CALs) for remote desktop services. The vulnerabilities, identified as CVE-2024-38076, CVE-2024-38077, and CVE-2024-38089, all enable remote code execution and should be at the top of the list of bugs to prioritize this month. “Exploitation of this should be straightforward, as any unauthenticated user could execute their code simply by sending a malicious message to an affected server,” Childs said in his post.

Microsoft wants organizations to disable the Remote Desktop Licensing Service if they are not using it. The company also recommends organizations immediately install the patches for the three vulnerabilities even if they plan to disable the service.
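For teams turning the guidance above into a patch queue, the following is a minimal sketch in Python of one possible triage ordering. It is not Microsoft’s or any quoted researcher’s method. The `Advisory` class, the `triage_key` function, and the ordering rule itself (actively exploited first, then critical-rated, then publicly known, then higher CVSS) are assumptions made for illustration. The data is limited to the CVEs and scores named in this article, and scores the article does not quote are left as `None`.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Advisory:
    """Hypothetical record for one CVE, holding only facts quoted in the article."""
    cve: str
    component: str
    cvss: Optional[float]    # score as quoted in the article; None if not quoted
    exploited: bool = False  # attackers are actively exploiting it
    public: bool = False     # publicly known before the July update
    critical: bool = False   # rated critical by Microsoft


ADVISORIES = [
    Advisory("CVE-2024-35264", ".NET and Visual Studio", None, public=True),
    Advisory("CVE-2024-37985", "Intel microprocessor (third-party CVE)", None, public=True),
    Advisory("CVE-2024-38076", "Remote Desktop Licensing Service", 9.8, critical=True),
    Advisory("CVE-2024-38077", "Remote Desktop Licensing Service", 9.8, critical=True),
    Advisory("CVE-2024-38080", "Windows Hyper-V", 6.8, exploited=True),
    Advisory("CVE-2024-38089", "Remote Desktop Licensing Service", 9.8, critical=True),
    Advisory("CVE-2024-38112", "Windows MSHTML Platform", 7.0, exploited=True),
]


def triage_key(a: Advisory):
    # Assumed ordering, not an official one: False sorts before True, so each
    # flag is negated to put flagged items first. A missing CVSS counts as 0.0,
    # and the CVE ID breaks any remaining ties deterministically.
    return (not a.exploited, not a.critical, not a.public, -(a.cvss or 0.0), a.cve)


def triage(advisories: List[Advisory]) -> List[Advisory]:
    """Return the advisories sorted into the assumed patch order."""
    return sorted(advisories, key=triage_key)


if __name__ == "__main__":
    for rank, a in enumerate(triage(ADVISORIES), start=1):
        score = "n/a" if a.cvss is None else f"{a.cvss:.1f}"
        print(f"{rank}. {a.cve}  {a.component}  CVSS {score}")
```

Under this ordering, the two exploited zero-days land ahead of the three 9.8-rated Remote Desktop Licensing Service bugs despite their lower scores. An organization that exposes that service to the internet might reasonably reverse those two tiers.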

One eyebrow-raising aspect of this month’s Microsoft security update is the number of unique CVEs that affect Microsoft SQL Server: some 39, or more than a quarter of the 139 disclosed vulnerabilities. “Thankfully, none of them are critical based on their CVSS scores and they’re all listed as ‘Exploitation Less Likely,’” said Tyler Reguly, associate director of security R&D at Fortra. “Even with those saving graces, there are still a lot of CVSS 8.8 vulnerabilities that SQL Server customers will be looking to patch,” he noted.

As has been the trend in recent months, there were 20 elevation of privilege (EoP) bugs in this month’s update, slightly outnumbering remote code execution vulnerabilities (18). Though Microsoft and other software vendors tend to rate EoP bugs as less severe overall than remote code execution vulnerabilities, security researchers have urged security teams to pay equal attention to both. That’s because privilege escalation bugs often allow attackers to take complete admin control of affected systems and wreak the same kind of havoc as they would by running arbitrary code on them remotely.
