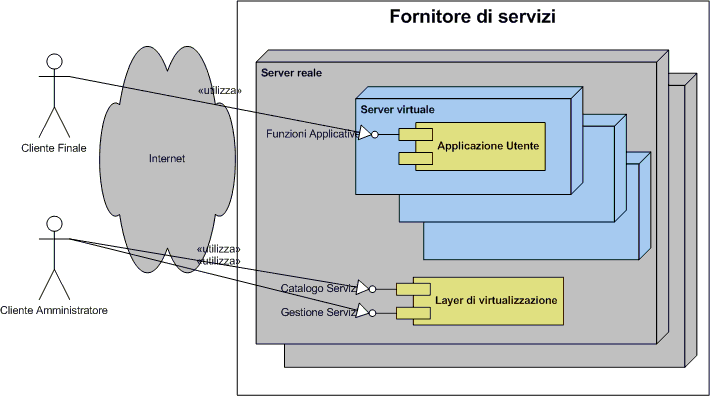

- Image via Wikipedia

Cloud Security and Privacy: An Enterprise Perspective on Risks and Compliance

The ENISA (European Network and Information Security Agency) released the Cloud Computing Risk Assessment document.

The document does well to focus on SMEs (Small and Medium-sized Enterprises) because, as the report says, “Given the reduced cost and flexibility it brings, a migration to cloud computing is compelling for many SMEs”.

Three initial standout items for me are:

1. The document’s stated Risk Number One is Lock-In. “This makes it extremely difficult for a customer to migrate from one provider to another, or to migrate data and services to or from an in-house IT environment. Furthermore, cloud providers may have an incentive to prevent (directly or indirectly) the portability of their customers services and data.”

Remember that the document identified SMEs as a major market for cloud computing. What can they do about the lock-in? Let’s see what the document says:

The document identifies SaaS lock-in:

> Customer data is typically stored in a custom database schema designed by the SaaS provider. Most SaaS providers offer API calls to read (and thereby ‘export’) data records. However, if the provider does not offer a readymade data ‘export’ routine, the customer will need to develop a program to extract their data and write it to file ready for import to another provider. It should be noted that there are few formal agreements on the structure of business records (e.g., a customer record at one SaaS provider may have different fields than at another provider), although there are common underlying file formats for the export and import of data, e.g., XML. The new provider can normally help with this work at a negotiated cost. However, if the data is to be brought back in-house, the customer will need to write import routines that take care of any required data mapping unless the CP offers such a routine. As customers will evaluate this aspect before making important migration decisions, it is in the long-term business interest of CPs to make data portability as easy, complete and cost-effective as possible.
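The export-and-map step described above can be sketched in a few lines. This is a minimal illustration, not code from the ENISA report: the field names in `FIELD_MAP` (`cust_name`, `tel`, and so on) are hypothetical stand-ins for two providers’ schemas, and the XML layout is an assumption. Fields with no mapping are returned separately rather than silently dropped, because those are exactly the ones that need a human decision.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping: old provider's field name -> new provider's field name.
FIELD_MAP = {
    "cust_name": "name",
    "cust_email": "email",
    "tel": "phone",
}


def map_record(old_record: dict, field_map: dict) -> tuple[dict, dict]:
    """Rename fields per field_map; return (mapped, unmapped) so nothing is lost."""
    mapped, unmapped = {}, {}
    for key, value in old_record.items():
        if key in field_map:
            mapped[field_map[key]] = value
        else:
            unmapped[key] = value
    return mapped, unmapped


def records_to_xml(records: list[dict]) -> str:
    """Serialize mapped records to a simple (assumed) XML layout for import."""
    root = ET.Element("customers")
    for rec in records:
        elem = ET.SubElement(root, "customer")
        for key, value in rec.items():
            ET.SubElement(elem, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    exported = [{"cust_name": "Acme Ltd", "tel": "555-0100", "region": "EU"}]
    mapped_records = []
    for record in exported:
        mapped, leftovers = map_record(record, FIELD_MAP)
        mapped_records.append(mapped)
        if leftovers:
            print("needs manual mapping:", leftovers)
    print(records_to_xml(mapped_records))
```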

And what about PaaS lock-in?

> PaaS lock-in occurs at both the API layer (ie, platform specific API calls) and at the component level. For example, the PaaS provider may offer a highly efficient back-end data store. Not only must the customer develop code using the custom APIs offered by the provider, but they must also code data access routines in a way that is compatible with the back-end data store. This code will not necessarily be portable across PaaS providers, even if a seemingly compatible API is offered, as the data access model may be different (e.g., relational v hashing).
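One common way to contain this kind of lock-in (a general technique, not something the report prescribes) is to keep application logic behind a small data-access interface, so that only the adapter classes are provider-specific. The sketch below assumes a hypothetical `CustomerStore` interface, with an in-memory dict standing in for a hashing-style back end and in-memory SQLite standing in for a relational one.

```python
import sqlite3
from abc import ABC, abstractmethod
from typing import Optional


class CustomerStore(ABC):
    """Hypothetical provider-neutral interface the application codes against."""

    @abstractmethod
    def put(self, customer_id: str, name: str) -> None: ...

    @abstractmethod
    def get(self, customer_id: str) -> Optional[str]: ...


class KeyValueStore(CustomerStore):
    """Stand-in for a hashing/key-value back end (a plain dict here)."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, customer_id: str, name: str) -> None:
        self._data[customer_id] = name

    def get(self, customer_id: str) -> Optional[str]:
        return self._data.get(customer_id)


class RelationalStore(CustomerStore):
    """Stand-in for a relational back end (in-memory SQLite here)."""

    def __init__(self) -> None:
        self._conn = sqlite3.connect(":memory:")
        self._conn.execute(
            "CREATE TABLE customers (id TEXT PRIMARY KEY, name TEXT NOT NULL)"
        )

    def put(self, customer_id: str, name: str) -> None:
        self._conn.execute(
            "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)",
            (customer_id, name),
        )
        self._conn.commit()

    def get(self, customer_id: str) -> Optional[str]:
        row = self._conn.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


def rename_customer(store: CustomerStore, customer_id: str, new_name: str) -> bool:
    """Application logic: written once, unaware of which back end it runs on."""
    if store.get(customer_id) is None:
        return False
    store.put(customer_id, new_name)
    return True
```

This does not make the back ends equivalent: differences in query capability, consistency and transactions still leak through, which is the report’s point. It only limits how much code has to change in a migration.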

In each case, the ENISA document says that the customer must develop code to get around the lock-in, bridging both APIs and data formats. However, SMEs generally do not have developers on staff to write this code, so “writing code” is not usually an option for them. I know this first-hand: I worked for an EDI service provider that served SMEs in Europe, and we provided the code development services ourselves whenever they needed data transformation done on the client side.

But there is another answer. This bridging is the job of a Cloud Service Broker. The Cloud Service Broker addresses the cloud lock-in problem head-on by bridging APIs and bridging data formats (which, as the ENISA document mentions, are often XML). It is unreasonable to expect an SME to write custom code to bridge together cloud APIs when an off-the-shelf Cloud Service Broker can do the job for them with no coding involved, while providing value-added services such as monitoring the cloud provider’s availability, encrypting data before it goes up to the cloud provider, and scanning data for privacy leaks. Read the Cloud Service Broker White Paper here.
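To make the “scanning data for privacy leaks” idea concrete, here is a minimal sketch of one check a broker could apply before forwarding a record. It is illustrative only and not taken from the white paper: `broker_forward` and its `send` callback (standing in for a provider-specific API adapter) are hypothetical, and the check looks only for payment card numbers, using a digit-pattern match followed by the Luhn checksum to cut down false positives.

```python
import re
from typing import Callable

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in text that look like valid card numbers."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


def broker_forward(record: dict, send: Callable[[dict], None]) -> bool:
    """Forward record via send() only if no string field contains a card number."""
    for value in record.values():
        if isinstance(value, str) and find_card_numbers(value):
            return False  # block: possible privacy leak
    send(record)
    return True
```

A real broker would chain several such checks (and encryption) in the same place, which is the design point: the SME configures the broker rather than writing this code itself.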

2. “Customers should not be tempted to use custom implementations of authentication, authorisation and accounting (AAA) as these can become weak if not properly implemented.”

Yes! Totally agree. There is already a tendency to look at Amazon’s HMAC-signature-over-QueryString authentication scheme and implement something similar, but not exactly the same. For example, an organization may decide “Let’s do what Amazon does and make sure all incoming REST requests to our PaaS service are signed by a trusted client using HMAC authentication”, but leave any timestamp out of the signed data. Without a signed timestamp, an attacker who captures one valid request can simply resend it later, and it will still verify. I can easily imagine this, because it happened all the time in the SOA / Web Services world: an organization would decide “Let’s make sure requests are signed using XML Signature by trusted clients”, but leave the system open to a simple capture-replay attack. Cloud PaaS providers should not repeat these mistakes.
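The difference a signed timestamp makes can be shown with Python’s standard `hmac` module. This is a generic sketch under stated assumptions, not Amazon’s actual signature algorithm: the string-to-sign layout, the five-minute `MAX_SKEW_SECONDS` window, and the optional `seen` set of already-used signatures are all illustrative choices.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_SKEW_SECONDS = 300  # assumption: accept requests signed within +/- 5 minutes


def string_to_sign(method: str, path: str, query: str, timestamp: int) -> bytes:
    # The timestamp is inside the signed bytes, so it cannot be changed
    # without invalidating the signature.
    return "\n".join([method.upper(), path, query, str(timestamp)]).encode("utf-8")


def sign(secret: bytes, method: str, path: str, query: str, timestamp: int) -> str:
    msg = string_to_sign(method, path, query, timestamp)
    return hmac.new(secret, msg, hashlib.sha256).hexdigest()


def verify(
    secret: bytes,
    method: str,
    path: str,
    query: str,
    timestamp: int,
    signature: str,
    now: Optional[int] = None,
    seen: Optional[set] = None,
) -> bool:
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # too old (or future-dated): a replay of a captured request
    expected = sign(secret, method, path, query, timestamp)
    if not hmac.compare_digest(expected, signature):
        return False  # tampered request or wrong key
    if seen is not None:
        if signature in seen:
            return False  # identical request replayed inside the window
        seen.add(signature)
    return True
```

In practice the query string should be canonicalized (for example, parameters sorted) before signing so client and server build identical bytes, and the `seen` set would need to expire entries older than the skew window rather than grow forever.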

3. STRIDE and DREAD

Lastly, the document’s approach of examining the system in terms of data-at-rest and data-in-motion, identifying risks at each point (such as information disclosure, eavesdropping, or Denial-of-Service), then assigning a probability and impact to each risk, is very reminiscent of the “STRIDE and DREAD” model. However, I do not see STRIDE and DREAD mentioned anywhere in the document. I know the model is a bit long in the tooth now, and it has been refined since the book that introduced it, but it is still a good approach. It would have been worth mentioning here, since it is clearly an inspiration.
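For readers who have not used it, the DREAD half of the model is simple arithmetic. One common variant rates each threat from 1 to 10 on Damage, Reproducibility, Exploitability, Affected users and Discoverability, and averages the five ratings, while STRIDE (Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, Elevation of privilege) classifies the threat. The sketch below assumes that variant; the example threats and their ratings are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Stride(Enum):
    SPOOFING = "S"
    TAMPERING = "T"
    REPUDIATION = "R"
    INFORMATION_DISCLOSURE = "I"
    DENIAL_OF_SERVICE = "D"
    ELEVATION_OF_PRIVILEGE = "E"


@dataclass
class Threat:
    description: str
    category: Stride
    damage: int
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def dread_score(self) -> float:
        """Average of the five DREAD ratings (assumed 1-10 scale)."""
        ratings = (self.damage, self.reproducibility, self.exploitability,
                   self.affected_users, self.discoverability)
        if any(not 1 <= r <= 10 for r in ratings):
            raise ValueError("each DREAD rating must be between 1 and 10")
        return sum(ratings) / len(ratings)


def rank(threats: list[Threat]) -> list[Threat]:
    """Highest DREAD score first."""
    return sorted(threats, key=lambda t: t.dread_score(), reverse=True)


if __name__ == "__main__":
    threats = [
        Threat("Eavesdropping on data in motion to the provider",
               Stride.INFORMATION_DISCLOSURE, 8, 6, 5, 9, 7),
        Threat("Flooding the provider's public API",
               Stride.DENIAL_OF_SERVICE, 6, 9, 8, 10, 9),
    ]
    for t in rank(threats):
        print(f"{t.dread_score():.1f}  [{t.category.value}] {t.description}")
```

Ranking by the averaged score plays roughly the role that the probability and impact estimates play in the ENISA document.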

