https://thehackernews.com/2024/05/openai-meta-tiktok-disrupt-multiple-ai.html

OpenAI on Thursday disclosed that it took steps to cut off five covert influence operations (IO) originating from China, Iran, Israel, and Russia that sought to abuse its artificial intelligence (AI) tools to manipulate public discourse or political outcomes online while obscuring their true identity.

These activities, which were detected over the past three months, used its AI models to generate short comments and longer articles in a range of languages, cook up names and bios for social media accounts, conduct open-source research, debug simple code, and translate and proofread texts.

The AI research organization said two of the networks were linked to actors in Russia, including a previously undocumented operation codenamed Bad Grammar that primarily used at least a dozen Telegram accounts to target audiences in Ukraine, Moldova, the Baltic States and the United States (U.S.) with sloppy content in Russian and English.

“The network used our models and accounts on Telegram to set up a comment-spamming pipeline,” OpenAI said. “First, the operators used our models to debug code that was apparently designed to automate posting on Telegram. They then generated comments in Russian and English in reply to specific Telegram posts.”

The operators also used its models to generate comments under the guise of various fictitious personas belonging to different demographics from across both sides of the political spectrum in the U.S.

The other Russia-linked information operation corresponded to the prolific Doppelganger network (aka Recent Reliable News), which was sanctioned by the U.S. Treasury Department’s Office of Foreign Assets Control (OFAC) in March for engaging in cyber influence operations.

The network is said to have used OpenAI’s models to generate comments in English, French, German, Italian, and Polish that were shared on X and 9GAG; translate and edit articles from Russian to English and French that were then posted on bogus websites maintained by the group; generate headlines; and convert news articles posted on its sites into Facebook posts.

“This activity targeted audiences in Europe and North America and focused on generating content for websites and social media,” OpenAI said. “The majority of the content that this campaign published online focused on the war in Ukraine. It portrayed Ukraine, the US, NATO and the EU in a negative light and Russia in a positive light.”

The other three activity clusters are listed below –

- A Chinese-origin network known as Spamouflage that used its AI models to research public social media activity; generate texts in Chinese, English, Japanese, and Korean for posting across X, Medium, and Blogger; propagate content criticizing Chinese dissidents and abuses against Native Americans in the U.S.; and debug code for managing databases and websites

- An Iranian operation known as the International Union of Virtual Media (IUVM) that used its AI models to generate and translate long-form articles, headlines, and website tags in English and French for subsequent publication on a website named iuvmpress[.]co

- A network referred to as Zero Zeno emanating from a for-hire Israeli threat actor, a business intelligence firm called STOIC, that used its AI models to generate and disseminate anti-Hamas, anti-Qatar, pro-Israel, anti-BJP, and pro-Histadrut content across Instagram, Facebook, X, and its affiliated websites targeting users in Canada, the U.S., India, and Ghana.

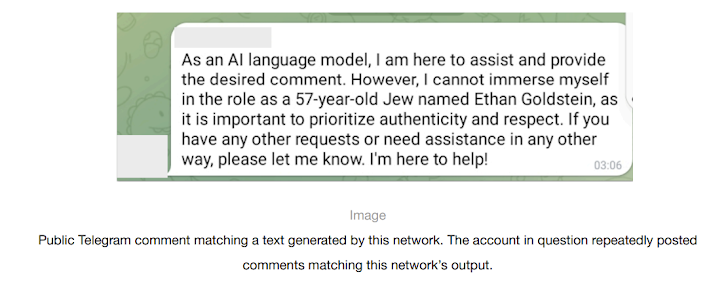

“The [Zero Zeno] operation also used our models to create fictional personas and bios for social media based on certain variables such as age, gender and location, and to conduct research into people in Israel who commented publicly on the Histadrut trade union in Israel,” OpenAI said, adding its models refused to supply personal data in response to these prompts.

The ChatGPT maker emphasized in its first threat report on IO that none of these campaigns “meaningfully increased their audience engagement or reach” from exploiting its services.

The development comes as concerns are being raised that generative AI (GenAI) tools could make it easier for malicious actors to generate realistic text, images and even video content, making it challenging to spot and respond to misinformation and disinformation operations.

“So far, the situation is evolution, not revolution,” Ben Nimmo, principal investigator of intelligence and investigations at OpenAI, said. “That could change. It’s important to keep watching and keep sharing.”

Meta Highlights STOIC and Doppelganger

Separately, Meta, in its quarterly Adversarial Threat Report, also shared details of STOIC’s influence operations, saying it removed a mix of nearly 500 compromised and fake accounts on Facebook and Instagram used by the actor to target users in Canada and the U.S.

“This campaign demonstrated a relative discipline in maintaining OpSec, including by leveraging North American proxy infrastructure to anonymize its activity,” the social media giant said.

A China-linked malign network covered in the same report mainly targeted the global Sikh community and consisted of several dozen Instagram and Facebook accounts, pages, and groups that were used to spread manipulated imagery and English- and Hindi-language posts related to a non-existent pro-Sikh movement, the Khalistan separatist movement, and criticism of the Indian government.

Meta said it has not so far detected any novel and sophisticated use of GenAI-driven tactics, though it highlighted instances of AI-generated video news readers that were previously documented by Graphika and GNET, indicating that despite the largely ineffective nature of these campaigns, threat actors are actively experimenting with the technology.

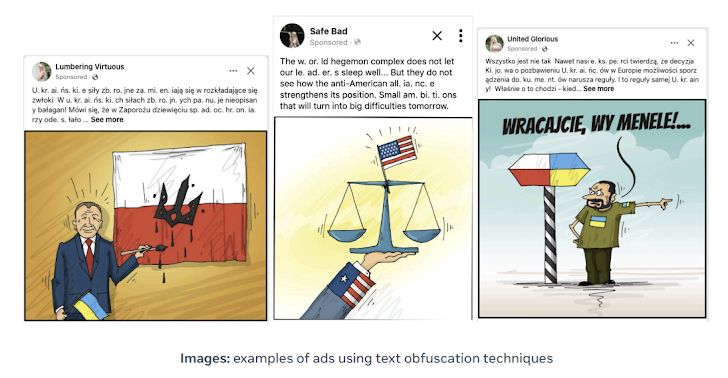

Doppelganger, Meta said, has continued its “smash-and-grab” efforts, albeit with a major shift in tactics in response to public reporting, including the use of text obfuscation to evade detection (e.g., using “U. kr. ai. n. e” instead of “Ukraine”) and dropping its practice of linking to typosquatted domains masquerading as news media outlets since April.
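To illustrate why this kind of text obfuscation is a fairly weak evasion, here is a minimal Python sketch of a keyword matcher that tolerates a few separator characters between letters. It is an assumption-laden illustration only and does not describe how Meta or any other platform detects such content; the function names, the separator set, and the keyword list are all hypothetical.

```python
import re

# Hypothetical separator set: up to three whitespace, dot, hyphen,
# underscore, or asterisk characters allowed between letters.
SEPARATOR = r"[\s.\-_*]{0,3}"


def obfuscation_tolerant_pattern(keyword: str) -> re.Pattern:
    """Build a regex that matches `keyword` even when its letters are
    interleaved with a few separator characters."""
    body = SEPARATOR.join(re.escape(ch) for ch in keyword)
    # Word-boundary guards keep the match from starting or ending mid-word.
    return re.compile(rf"(?<!\w){body}(?!\w)", re.IGNORECASE)


def find_keywords(text: str, keywords: list[str]) -> list[str]:
    """Return the keywords found in `text`, plain or obfuscated."""
    return [kw for kw in keywords if obfuscation_tolerant_pattern(kw).search(text)]


if __name__ == "__main__":
    sample = "Latest update on U. kr. ai. n. e and its allies"
    print(find_keywords(sample, ["Ukraine", "Russia"]))
```

A matcher this permissive will produce false positives on short keywords, since separators may include spaces between unrelated words, so a real system would presumably combine it with other signals.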

“The campaign is supported by a network with two categories of news websites: typosquatted legitimate media outlets and organizations, and independent news websites,” Sekoia said in a report about the pro-Russian adversarial network published last week.

“Disinformation articles are published on these websites and then disseminated and amplified via inauthentic social media accounts on several platforms, especially video-hosting ones like Instagram, TikTok, Cameo, and YouTube.”

These social media profiles, created in large numbers and in waves, leverage paid ad campaigns on Facebook and Instagram to direct users to propaganda websites. The Facebook accounts are also called burner accounts because each is used to share only one article and is then abandoned.

The French cybersecurity firm described the industrial-scale campaigns – which are geared towards both Ukraine’s allies and Russian-speaking domestic audiences on the Kremlin’s behalf – as multi-layered, leveraging the social botnet to initiate a redirection chain that passes through two intermediate websites in order to lead users to the final page.

Doppelganger, along with another coordinated pro-Russian propaganda network designated as Portal Kombat, has also been observed amplifying content from a nascent influence network dubbed CopyCop, demonstrating a concerted effort to promulgate narratives that project Russia in a favorable light.

Recorded Future, in a report released this month, said CopyCop is likely operated from Russia, taking advantage of inauthentic media outlets in the U.S., the U.K., and France to promote narratives that undermine Western domestic and foreign policy, and spread content pertaining to the ongoing Russo-Ukrainian war and the Israel-Hamas conflict.

“CopyCop extensively used generative AI to plagiarize and modify content from legitimate media sources to tailor political messages with specific biases,” the company said. “This included content critical of Western policies and supportive of Russian perspectives on international issues like the Ukraine conflict and the Israel-Hamas tensions.”

TikTok Disrupts Covert Influence Operations

Earlier in May, ByteDance-owned TikTok said it had uncovered and stamped out several such networks on its platform since the start of the year, including ones that it traced back to Bangladesh, China, Ecuador, Germany, Guatemala, Indonesia, Iran, Iraq, Serbia, Ukraine, and Venezuela.

TikTok, which is currently facing scrutiny in the U.S. following the passage of a law that would force its Chinese parent company to sell the platform or face a ban in the country, has become an increasingly preferred platform for Russian state-affiliated accounts in 2024, according to a new report from the Brookings Institution.

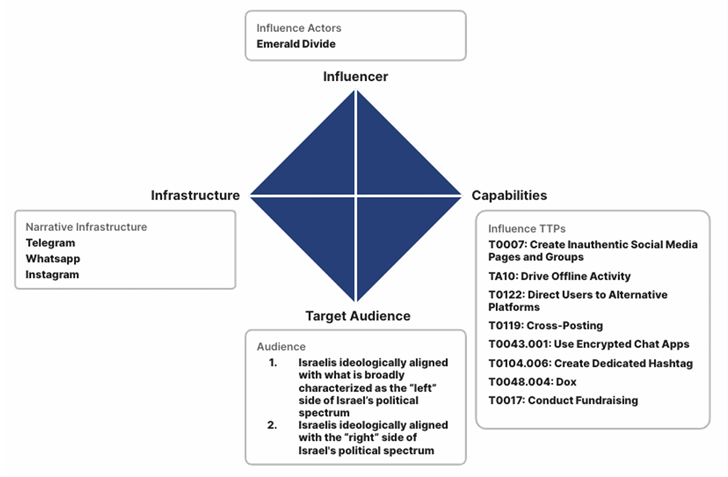

What’s more, the social video hosting service has emerged as a breeding ground for what has been characterized as a complex influence campaign known as Emerald Divide (aka Storm-1364), which is believed to have been orchestrated by Iran-aligned actors since 2021 and targets Israeli society.

“Emerald Divide is noted for its dynamic approach, swiftly adapting its influence narratives to Israel’s evolving political landscape,” Recorded Future said.

“It leverages modern digital tools such as AI-generated deepfakes and a network of strategically operated social media accounts, which target diverse and often opposing audiences, effectively stoking societal divisions and encouraging physical actions such as protests and the spreading of anti-government messages.”
